Focus

Computers elaborating information

Principal Metaphors

- Knowledge is … ever-expanding scope of possible functioning

- Knowing is … recognizing forms and recommending actions (enabled and constrained by current base of analyzed information)

- Learner is … a computer algorithm

- Learning is … organizing, analyzing, connecting, extracting, and logically extending information

- Teaching is … self-teaching through recursive learning cycles

Originated

1980s

Synopsis

Deep Learning, a subdiscourse of Machine Learning, refers to iterative machine learning algorithms in which each layer of non-linear processing takes the output of the previous layer as its input, so that successive layers form a hierarchy of concepts. Although these algorithms are loosely based on the processing and communication patterns of biological neural networks, machine structures and functions are entirely different from those of biological brains. Deep Learning algorithms have been useful for speech recognition, computer vision, and social network filtering. They have also been applied to generate complicated mathematical proofs, play sophisticated games, and build advanced models of complex natural systems.

Commentary

Deep Learning relies on huge data sets, as a system “learns” what is essential to a specific concept by gradually identifying the common elements across many, many examples. Human learning (and other models of Machine Learning) works quite differently, often arriving at critical discernments from very few experiences:

- One-Shot Learning – becoming able to make a critical discernment or identification on the basis of a single example/experience/encounter

- Few-Shot Learning (Low-Shot Learning) – becoming able to make a critical discernment or identification on the basis of just a few examples/experiences/encounters

- Active Learning – In the context of Machine Learning, Active Learning refers to strategies aimed at maximizing performance while minimizing trials/samples. (Note: Should not be confused or conflated with the educational discourse of Active Learning.)

- Deep Active Learning – a mash-up of Deep Learning and Active Learning (see above), aimed at rendering Deep Learning more efficient. (Note: There’s also a “Deep Active Learning” associated with Deep vs. Surface Learning.)

- Transformer (Google, 2010s) – a form of Deep Learning modelled on brain networks that is designed to discern the underlying structure of any massive data set by weighing the relative importance and the strengths of connections among different parts of the data
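The Transformer's weighing of the relative importance of connections among different parts of the data is commonly implemented as scaled dot-product self-attention. The following is a minimal sketch in plain Python under simplifying assumptions: each part of the data is already a short list of numbers, and the query, key, and value vectors are supplied directly (in an actual Transformer they are produced by learned projections, with many attention heads and layers stacked). The function names and the toy data are illustrative only and are not taken from any library.

```python
import math


def softmax(scores):
    # Subtract the largest score for numerical stability, then normalize
    # so the resulting weights are positive and sum to 1
    largest = max(scores)
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    # Similarity between two vectors of equal length
    return sum(x * y for x, y in zip(a, b))


def self_attention(queries, keys, values):
    """Scaled dot-product attention over a sequence of parts.

    For each query, every key is scored by similarity (scaled by the
    square root of the key dimension), the scores are turned into
    weights with softmax, and the output is the weighted mix of values.
    """
    scale = math.sqrt(len(keys[0]))
    outputs = []
    for q in queries:
        weights = softmax([dot(q, k) / scale for k in keys])
        mixed = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(mixed)
    return outputs


# Hypothetical toy data: three "parts", each a 2-number vector
parts = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
# Simplification: the same vectors serve as queries, keys, and values
for row in self_attention(parts, parts, parts):
    print([round(x, 3) for x in row])
```

Each output row is a blend of all three parts, weighted toward the parts whose keys most resemble that row's query; in this sketch, that weighting is the whole of what "attending" means.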

Authors and/or Prominent Influences

Rina Dechter; Igor Aizenberg

Status as a Theory of Learning

Deep Learning is a theory of learning. It draws on and contributes to understandings of complex (i.e., nested, recursively elaborative, situated) cognitive systems. Moreover, although it focuses on the use of digital computers to organize, analyze, connect, and logically extend what is known, such activities are entirely in the realm of human-generated learning and knowing.

Status as a Theory of Teaching

Deep Learning is not a theory of teaching.

Status as a Scientific Theory

Deep Learning meets the requirements of a scientific theory of learning. Significantly, it has a large and rapidly expanding evidence base.

Subdiscourses:

- Active Learning

- Deep Active Learning

- Few-Shot Learning (Low-Shot Learning)

- One-Shot Learning

- Transformer

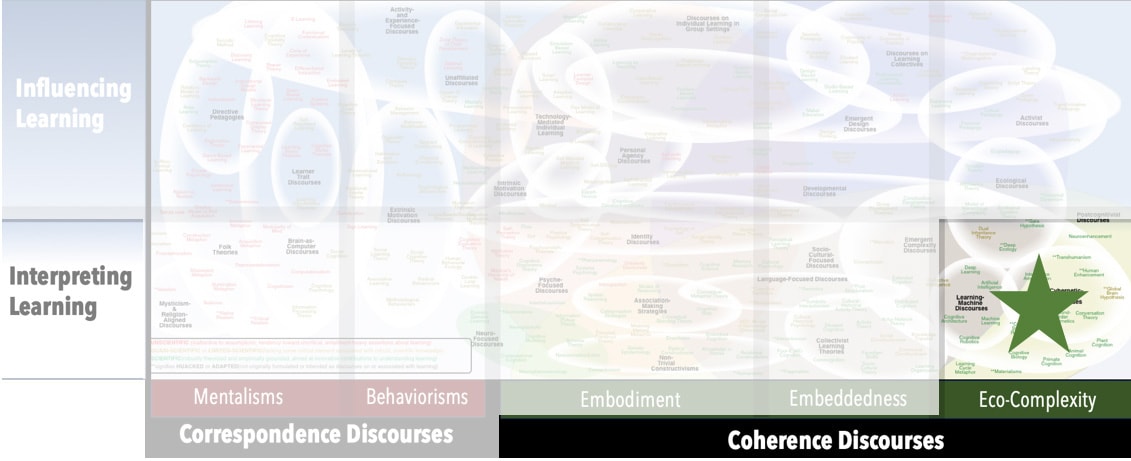

Map Location

Please cite this article as:

Davis, B., & Francis, K. (2024). “Deep Learning” in Discourses on Learning in Education. https://learningdiscourses.com.
