AKA

AI

Machine Intelligence

Focus

Computers able to provide novel solutions to complex problems

- Knowledge is … ever-evolving repertoire of possibility

- Knowing is … functioning

- Learner is … an “intelligence” (often characterized in terms of a “mind”)

- Learning is … expanding the repertoire of possibility

- Teaching is … challenging

Originated

1950s

Synopsis

Popularly, Artificial Intelligence (AI) is understood in terms of machines mimicking human cognition – learning, solving problems, and so on. It is more properly understood as a domain of computer science focused on devices that are able to take some level of agency in achieving novel goals. Beyond that detail, the scope of AI is disputed, in part because “novel goals” is a moving target, as once-unimaginable feats become routine. Commonly mentioned aspects include abilities to collect relevant information about the environment, draw inferences based on that information, and apply those inferences flexibly – all of which are associated with capacities to learn, perceive, interpret, classify, test, compare, select, reason, plan, and reflect. Categories of AI include:

- Weak AI (Narrow AI) – Artificial Intelligence research focused on specific tasks within a closed system (i.e., one in which all the possibilities can be specified). Subtypes include:

- Reactive AI – Artificial Intelligence systems that do not learn on their own – that is, that are “hard” programmed to generate identical outputs for identical inputs

- Strong AI – sometimes used as a synonym for Artificial General Intelligence (see below), and sometimes used in a narrower sense to refer to a type of Artificial General Intelligence that achieves consciousness/sentience

- Artificial General Intelligence (AGI; Full AI; General Intelligent Action) – a currently (as of early 2023) aspired-to level of Artificial Intelligence that can engage with any cognitive task in a manner that meets or exceeds the abilities of a human

- General AI – Artificial Intelligence research that addresses novel and/or expansive tasks that are located in open systems, requiring some manner of invention and/or innovative transfer from another situation

- Generative AI (Artificial Imagination) – Artificial Intelligence systems that can create new textual, auditory, visual, and/or coding content that is experienced by humans as original and authentic

- Limited Memory AI – Artificial Intelligence systems that extend the capacities of Reactive AI (see above) by combining preprogrammed structures with novel information (e.g., incoming data about the current situation)

- Theory of Mind AI – Artificial Intelligence systems that can participate in meaningful conversations with humans, enabled in part by abilities to recognize, remember, and manifest emotions fitted to the situation


There is emerging consensus that each of the above has been achieved in non-trivial ways. Types of General AI that are on the current horizon of possibility include:

- Self-Aware AI – Artificial Intelligence systems that have a level of Consciousness (for a definition, see Cognitive Science)

Prominent constructs and subdiscourses include:

- Cognitive Computing – One strategy used to cut through the clutter of meanings for Artificial Intelligence is to distinguish it from Cognitive Computing. According to advocates of this tactic, AI is defined simply in terms of using technologies to augment human intelligence, to solve complex problems, and to meet complex demands in real time. In contrast, Cognitive Computing is interested in mimicking human action and reasoning – that is, Cognitive Computing refers to those AI efforts that limit themselves to thinking strategies humans would use.

- Computational Creativity (Artificial Creativity; Creative Computation; Creative Computing; Mechanical Creativity) (2010s) – a blending of Artificial Intelligence, Cognitive Psychology, and Neuroscience, aiming to enhance creativity through two complementary foci: (1) to simulate and replicate human creativity using computers and (2) to better understand human creativity

- Evolutionary Computation – a branch of Artificial Intelligence that employs principles of Universal Darwinism, by iteratively generating sets of possible solutions to defined problems and subjecting those possible solutions to evolutionary pressures (i.e., metaphorically speaking, either culling possible solutions or compelling them to adapt by testing their fitness in relation to the defined problem). Relevant constructs include:

- Genetic Algorithm – the name of the search heuristics (i.e., programs) used in Evolutionary Computation

- Expert System (Knowledge-Based System) – a program that appears to mimic the performance of an expert in solving a problem, based on the strategy of being provided with all possible actions within a domain along with “if → then” rules to sift through those actions

- Knowledge Representation and Reasoning (KRR; KR&R; KR2) (Brian Smith, 1980s) – in Artificial Intelligence, a field that combines insights from Psychology and Modes of Reasoning in efforts to devise modes of formatting information in ways that computers can use to solve complex tasks

- Neural Network (Artificial Neural Network) – a reference to combinations of hardware and software designed to simulate brain functioning (see The Neural Network Zoo for a typology of Neural Networks)

- Organoid Intelligence (Lena Smirnova, Brian Caffo, 2020s) – a form of “biological computing” involving organoids (cultured human brain cells) and brain–machine interface technology. Organoid Intelligence research is touted not only as a promising strand of Artificial Intelligence, but also as having much to offer research into human learning and well-being.

- Prompt Engineering (2020s) – the process of designing a sequence of prompts to attain a desired output from an AI system

- Soft Computing – a reference to those algorithms, logics, and structures designed to tolerate levels of uncertainty or imprecision. Examples include Fuzzy Logic (under Modes of Reasoning), Genetic Algorithms (see above), and Neural Networks (see above).

- Hard Computing – a reference to those algorithms, logics, and structures designed to support quests for precise calculations and provably correct problem solutions
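To make the evolutionary loop described under Evolutionary Computation and Genetic Algorithm more concrete, here is a minimal Python sketch of a genetic algorithm. It is illustrative only and rests on assumptions not found in the entries above: the toy problem (evolving a bit string with as many 1s as possible, often called “OneMax”), tournament selection, single-point crossover, bit-flip mutation, and all parameter values are choices made for this example. Selection plays the role of culling; crossover and mutation supply the variation through which candidate solutions “adapt.”

```python
import random

# Assumed toy problem ("OneMax"), chosen for illustration: evolve a bit string
# whose fitness is simply the number of 1s it contains.
GENOME_LENGTH = 20


def fitness(genome: list[int]) -> int:
    """Score a candidate solution against the defined problem."""
    return sum(genome)


def select(population: list[list[int]], rng: random.Random) -> list[int]:
    """Tournament selection (an assumption): the fitter of two random candidates survives."""
    a, b = rng.sample(population, 2)
    return a if fitness(a) >= fitness(b) else b


def crossover(p1: list[int], p2: list[int], rng: random.Random) -> list[int]:
    """Single-point crossover: splice the front of one parent onto the back of the other."""
    point = rng.randrange(1, len(p1))
    return p1[:point] + p2[point:]


def mutate(genome: list[int], rate: float, rng: random.Random) -> list[int]:
    """Flip each bit with a small probability, introducing new variation."""
    return [1 - bit if rng.random() < rate else bit for bit in genome]


def evolve(pop_size: int = 30, generations: int = 100, rate: float = 0.02,
           seed: int = 0) -> list[int]:
    """Run the generate-test-cull loop and return the fittest genome found."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(GENOME_LENGTH)]
                  for _ in range(pop_size)]
    for _ in range(generations):
        best = max(population, key=fitness)
        if fitness(best) == GENOME_LENGTH:
            break  # a perfect solution has emerged
        # Elitism (an assumption): carry the current best forward unchanged,
        # then refill the population with mutated offspring of selected parents.
        offspring = [best]
        while len(offspring) < pop_size:
            child = crossover(select(population, rng), select(population, rng), rng)
            offspring.append(mutate(child, rate, rng))
        population = offspring
    return max(population, key=fitness)


if __name__ == "__main__":
    best = evolve()
    print(best, fitness(best), "of", GENOME_LENGTH)
```

Because the best candidate of each generation is carried forward unchanged, the best fitness in this sketch never decreases from one generation to the next; without that elitism step, a good solution could be lost to an unlucky round of crossover and mutation.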

Perhaps more than any other discourse mentioned on this site, Artificial Intelligence attracts speculation on the future of humanity's relationship with technology. Examples that might be construed as related to discourses on learning include:

- Intelligence Explosion – a hypothesized point in the development of Artificial Intelligence at which AI is able to generate new, faster, and more intelligent AI, which in turn generates new, faster, and more intelligent AI, and so on

- Superintelligence – a level of Artificial Intelligence that markedly exceeds Artificial General Intelligence (see above) and, when achieved, may be capable of explosive self-directed growth

- Technological Singularity (The Singularity) (Ray Kurzweil, 2000s) – a hypothesized point in the development of technology at which humanity loses the ability to control (or anticipate) its evolution, leading to unimaginably greater intelligence. Some have more recently defined the Singularity as the moment at which Artificial Intelligence surpasses human control.

- Turing Test (Imitation Game) (Alan Turing, 1950s) – an Artificial Intelligence test involving a dialogue between a human and a machine. It is deemed to be passed if human interlocutors are unable to determine whether they are engaging with a machine.

- AI Agent (Bot) (2010s) – a decision-making system that performs a specific task without human supervision or intervention. Multiple subclassifications of AI Agents have been proposed, according to perceived capability and autonomy. A popular scheme distinguishes five types: Simple Reflex Agent, Model-Based Agent, Goal-Based Agent, Utility-Based Agent, and Learning Agent.

- Chatbot (Chatterbot) (Michael Mauldin, 1990s) – originally: an online software application designed to simulate a human conversational partner for a narrow, well-defined, and scripted purpose. The term is now being applied more widely to include Dialogue Systems (see below).

- Dialogue System (Conversational Agent) – a computer system designed to interact with humans for diverse, unscripted, and nontrivial purposes, including posing novel queries, offering innovative ideas, and exchanging meaningful information. By the early 2020s, Dialogue Systems were emerging that could pass most versions of Turing Tests, alongside assertions that they were increasing in sophistication by an order of magnitude each year. Associated constructs include:

- Large Language Models – AI systems that are trained to recognize patterns of word usage by using complex algorithms to comb through massive datasets, enabling a sophisticated mimicking of contextually appropriate natural language. Examples include:

- ChatGPT (precursor: GPT-3, Generative Pretrained Transformer 3) (OpenAI, 2022) – one of the first Dialogue Systems capable of taking part in Turing-Test-passable conversations and generating original and acceptable academic writings

- LaMDA (Language Model for Dialogue Applications) (Google, 2022) – one of the first Dialogue Systems capable of passing most versions of the Turing Test, partly notable for the fact that one of its developers claimed it to be sentient (without evidence)


- Algerism (unknown, 2020s) – plagiarism that is conducted through, enabled by, or assisted by Artificial Intelligence

- Algorithmic Bias (2000s) – a reference to systematic errors in AI systems that might generate distorted and/or unjust results. Related constructs include:

- Data Poisoning (various, 2020s) – the manipulation of a Large Language Model to deliver false or skewed results through the deliberate inclusion of biased or inaccurate data in its training set

- Paperclip Problem (Paperclip Maximizer) (Nick Bostrom, 2010s) – a thought experiment focused on calamitous ethical and ecological consequences of a worldwide AI system that somehow comes to construe paperclip-manufacturing as its highest priority

- Stochastic Parrot (Emily Bender, 2020s) – meaning something like “random repetition,” a denigrating term coined to describe how Large Language Models work: by assembling sentences from phrases mined from large data sets (as opposed to creating meaningful statements)

- Algorithmic Justice (Joy Buolamwini, 2010s) – a commitment to mitigating possible harms of those AI systems that are trained on large data sets by monitoring, regulating, and addressing biases that might be present in and picked up from those sets

- Algorithmic Transparency (Algorithmic Accountability) (Nicholas Diakopoulos, Michael Koliska, 2010s) – the assertion that the principles by which AI systems make decisions should be explicit and clear to those who use, oversee, and/or are affected by those systems

- Critical AI (Lauren Goodlad, 2020s) – a blend of Critical Theory (under Critical Pedagogy) and Artificial Intelligence that encourages ongoing interrogation of the impacts of AI, especially in relation to differentiated benefits and hidden consequences

- Foom (Eliezer Yudkowsky, 2000s) – a term proposed to refer to the possibility of a sudden and potentially catastrophic explosion of AI capabilities that might be unleashed when AI achieves the capacity to improve itself

- Hallucination (Andrej Karpathy, 2010s) – an AI-generated response that is fictional but presented as factual

- Skills Gap (AI Skills Gap) (Bernard Marr, 2020s) – a lack of skilled AI talent, most apparent across business and government. This Skills Gap was first noted as AI systems (in particular, Large Language Models) moved toward ubiquity in the early 2020s – and, of course, education was identified as both the problem and the solution.

- Futurepedia.io – a regularly updated directory of AI tools
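The contrast between a simple reflex agent and a model-based agent (which echoes the contrast between Reactive AI and Limited Memory AI drawn earlier) can be sketched with a two-square “vacuum world,” a common textbook toy problem. This is a minimal illustration, not a standard implementation: the world, its percepts and action names, and the function and class names below are hypothetical.

```python
# Hypothetical two-square "vacuum world": squares "A" and "B", each "Clean" or "Dirty".
# A percept is (current location, status of that square).
Percept = tuple[str, str]


def simple_reflex_agent(percept: Percept) -> str:
    """Simple reflex agent: condition-action rules applied to the current percept only.

    Like Reactive AI, it has no memory, so identical inputs always yield identical outputs.
    """
    location, status = percept
    if status == "Dirty":
        return "Suck"
    return "Right" if location == "A" else "Left"


class ModelBasedAgent:
    """Model-based agent: keeps an internal model built from past percepts.

    Like Limited Memory AI, it combines fixed rules with remembered information.
    """

    def __init__(self) -> None:
        # The agent's model: squares it has observed to be clean.
        # Simplifying assumption: a square stays clean once observed clean.
        self.known_clean: set[str] = set()

    def act(self, percept: Percept) -> str:
        location, status = percept
        if status == "Dirty":
            return "Suck"
        self.known_clean.add(location)
        if {"A", "B"} <= self.known_clean:
            return "NoOp"  # both squares known clean: nothing left to do
        return "Right" if location == "A" else "Left"


if __name__ == "__main__":
    percepts = [("A", "Dirty"), ("A", "Clean"), ("B", "Clean"), ("B", "Clean")]
    agent = ModelBasedAgent()
    for p in percepts:
        print(p, simple_reflex_agent(p), agent.act(p))
```

Fed the percepts (A, Dirty), (A, Clean), (B, Clean), (B, Clean), the reflex agent answers Suck, Right, Left, Left and would keep shuttling back and forth indefinitely, whereas the model-based agent answers Suck, Right, NoOp, NoOp, because it remembers that both squares have been observed clean.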

Commentary

Since the first inklings of AI in the 1950s, criticisms of and anxieties about the technology have tended to revolve around its potential unintended and unanticipated consequences, with the most extreme worries focused on sentient machines that would merit the same rights as humans, thinking machines that might assume governance of humans, and super-intelligent machines that might wipe out humans. That said, it was not until the early 2020s that educators began to engage critically with the topic, as ChatGPT (see above) and other systems compelled teachers and researchers to grapple with technologies that could generate passable essays and literature reviews. An interesting split occurred at this historical moment, with many commentators looking backward for means to detect, sidestep, or otherwise prevent the use of AI in formal education, while other commentators engaged in forward-looking conversations about educational futures that embrace Artificial Intelligence.

Authors and/or Prominent Influences

Warren McCulloch; Marvin Minsky; Walter Pitts; Herbert Simon; Alan Turing

Status as a Theory of Learning

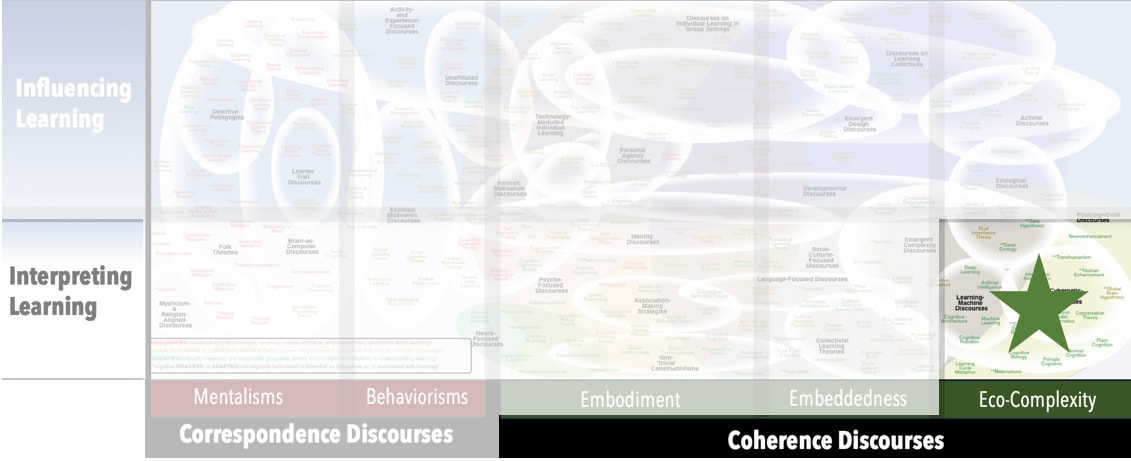

Artificial Intelligence is a domain of research, but it can also be construed as positing, investigating, and confirming a theory of learning. In the process, AI has afforded insights into human learning, especially around the untenability of the metaphors and dualisms that are typical of popular but untenable Correspondence Discourses.

Status as a Theory of Teaching

Artificial Intelligence is not a theory of teaching.

Status as a Scientific Theory

AI is a scientific domain – but, that said, it has had a checkered history since being founded in the 1950s. Spurred by early successes in programming machines to perform tasks that the programmers themselves found difficult (e.g., logic, advanced mathematics), researchers made confident predictions that machines would soon surpass humans. Against those expectations, there was surprisingly little progress in the first 50 years of AI research, apart from the slow realization that competencies humans find hard (e.g., logic, chess) are often easy to program, whereas competencies routinely mastered by young children (e.g., recognizing faces, learning a language) are very difficult to program. A major breakthrough came in the late 1990s, when assumptions rooted in Correspondence Discourses (such as interpreting thought in terms of processing inputted information and symbolically encoded internal representations) were abandoned and replaced with evolution-based, exploration-oriented, and emergentist notions (e.g., iterative dynamics, co-entangled nested systems, swarm tactics) associated with Coherence Discourses.

Subdiscourses:

- AI Agent (Bot)

- Algerism

- Algorithmic Bias

- Algorithmic Justice

- Algorithmic Transparency (Algorithmic Accountability)

- Artificial General Intelligence (AGI; Full AI; General Intelligent Action)

- C-Test (Complexity Test)

- Chatbot (Chatterbot)

- ChatGPT (precursor: GPT-3, Generative Pretrained Transformer 3)

- Cognitive Computing

- Computational Creativity (Artificial Creativity; Creative Computation; Creative Computing; Mechanical Creativity)

- Critical AI

- Data Poisoning

- Dialogue System (Conversational Agent)

- Evolutionary Computation

- Expert System (Knowledge-Based System)

- Foom

- Futurepedia.io

- General AI

- Generative AI (Artificial Imagination)

- Genetic Algorithm

- Hallucination

- Hard Computing

- Intelligence Explosion

- Knowledge Representation and Reasoning

- LaMDA (Language Model for Dialogue Applications)

- Large Language Models

- Limited Memory AI

- Neural Network (Artificial Neural Network)

- Organoid Intelligence

- Paperclip Problem (Paperclip Maximizer)

- Prompt Engineering

- Reactive AI

- Self-Aware AI

- Skills Gap (AI Skills Gap)

- Soft Computing

- Stochastic Parrot

- Strong AI

- Superintelligence

- Technological Singularity (The Singularity)

- Theory of Mind AI

- Turing Test (Imitation Game)

- Weak AI (Narrow AI)


Please cite this article as:

Davis, B., & Francis, K. (2025). “Artificial Intelligence” in Discourses on Learning in Education. https://learningdiscourses.com.
