Focus

Systematic lapses in reasoning and judgment … that are often useful

Principal Metaphors

- Knowledge is … scope of possible action and interpretation

- Knowing is … acting on appropriate biases

- Learner is … a largely nonconscious actor

- Learning is … developing biases

- Teaching is … interrupting biases

Originated

1970s

Synopsis

A Cognitive Bias is a systematic pattern of thinking and/or acting that is rooted in either uncritical associations or bad judgment, but that nonetheless feels “right” to the person. A Cognitive Bias can be manifest as a perceptual distortion, an inaccurate assessment, an illogical interpretation, or other irrational response. The prevailing view is that Cognitive Biases are mental “short cuts” which, in the right contexts, can lead to efficient and effective action – but that, inappropriately applied, can be constraining or damaging. Of note, a Cognitive Bias is distinct from a Heuristic:

- Heuristic (Cognitive Heuristic; Heuristic Technique) (Herbert A. Simon, 1950s) – a practical, experience-based (i.e., neither logical nor generalizable) means to solve a problem that usually, but not always, offers an efficient route to a solution

- Cognitive Bias Codex (Buster Benson, John Manoogian III, 2010s) – a chart in which all of the Cognitive Biases that are listed in Wikipedia (as of 2018) are classified, along with some Heuristics (see above). The categories (and the number of biases and heuristics included in each) are:

  - What should we remember?

    - We store memories differently, based on how they were experienced (6)

    - We reduce events and lists to their key elements (13)

    - We discard specifics to form generalities (6)

    - We edit and reinforce some memories after the fact (6)

  - Need to act fast

    - We favor simple-looking options and complete information over complex, ambiguous options (10)

    - To avoid mistakes, we’re motivated to preserve our autonomy and status in a group, and to avoid irreversible decisions (6)

    - To get things done, we tend to complete things we’ve invested time and energy in (13)

    - To stay focused, we favor the immediate, relatable thing in front of us (3)

    - To act, we must be confident we can make an impact and feel what we do is important (21)

  - Too much information

    - We notice things already primed in memory or repeated often (12)

    - Bizarre/funny/visually-striking/anthropomorphic things stick out more than non-bizarre/unfunny things (6)

    - We notice when something has changed (8)

    - We are drawn to details that confirm our own existing beliefs (13)

    - We notice flaws in others more easily than flaws in ourselves (3)

  - Not enough meaning

    - We find stories and patterns even in sparse data (13)

    - We fill in characteristics from stereotypes, generalities, and prior histories (12)

    - We imagine things and people we’re familiar with or fond of as better (9)

    - We simplify probabilities and numbers to make them easier to think about (9)

    - We think we know what other people are thinking (6)

    - We project our current mindset and assumptions onto the past and future (14)

- Anchoring Effect (Muzafer Sherif, 1950s) – one’s tendency to weight estimates, judgments, and/or opinions in the direction of an initial piece of information

- Approximation Bias (Samuel Gershman, 2020s) – the suggestion that humans take nonconscious mental shortcuts when making observations, due to limitations in cognitive resources. Approximation Bias is argued to be one of two fundamental principles (along with Inductive Bias) that govern human intelligence.

- Authority Bias (Authority Misinfluence) – a Cognitive Bias that leads people to follow the orders of authority figures, even when those orders contradict their own beliefs

- Availability Bias (Availability Heuristic; Availability Misweighing) – a mental shortcut that leads people to overvalue information that is easily recalled

- Barnum Effect (Forer Effect) (Bertram Forer, 1950s) – one’s tendency to believe or embrace non-specific and vague statements, such as those provided in horoscopes and fortune cookies, as highly accurate and deeply meaningful

- Confirmation Bias – the tendencies to seek out and embrace information that supports one’s established convictions and to ignore or reject evidence that contradicts such convictions

- Curse of Knowledge (Curse of Expertise) (Colin Camerer, George Loewenstein, 1980s) – the tendency to assume that the one with whom one is communicating has adequate background knowledge to understand

- Effect Effect – variously defined, referring to either: (1) the manner in which simply applying the label of “effect” to an observation can give it instant legitimacy; (2) the manner in which a labeled effect can influence subsequent observations of a specific phenomenon; (3) generalized responses to the concept of “effects” in academic or popular settings

- Expectation Bias – when prior beliefs or expectations influence perception, interpretation, or outcomes. Expectation Bias affects judgments in research, medicine, and daily life, leading one to see what one expects rather than what is objectively true.

- Hawthorne Effect (Elton Mayo, 1930s) – the tendency to adjust one’s activities (not necessarily deliberately) when one is aware of being observed

- Illusion of Information Adequacy (Hunter Gehlbach, 2020s) – the assumption that one’s current knowledge is sufficient to understand an immediate situation

- Illusion of Knowing (Ann Brown, 1970s) – when one is convinced one understands when one does not, owing to a lack of awareness of (or ignorance about) what one does not know

- Illusory Superiority (Above-Average Effect; Lake Wobegon Effect; Leniency Error; Primus Inter Pares Effect; Sense of Relative Superiority; Superiority Bias) (David Myers, 1970s) – a tendency to overestimate one’s abilities and personality traits in relation to those of others. Specific types of Illusory Superiority include:

- Dunning–Kruger Effect (Overconfidence Effect) (David Dunning, Justin Kruger, 1990s) – the tendency of those with limited expertise in a specific domain to overestimate their knowledge or skill in that domain. (Note: Some definitions include the complementary notion, whereby experts underestimate themselves. See also Conscious Competence Model of Learning.)

- Impostor Syndrome (Impostor Phenomenon; Impostorism) (Pauline Clance, Suzanne Imes, 1970s) – the feeling of being a fraud, typically rooted in a lack of confidence in one’s qualifications and abilities in relation to a role or situation

- Implicit Bias (Implicit Stereotype; Implicit Theory; Unconscious Bias) – nonconscious acts of attributing particular characteristics or habits to members of an identifiable group (distinguished, e.g., according to sex, gender, race, nationality, accent, class, etc.), typically associated with ascribing negative judgments

- Inductive Bias (Samuel Gershman, 2020s) – the suggestion that humans prefer or are predisposed to particular expectations or hypotheses, which orient subsequent observations and frame interpretations. Inductive Bias is argued to be one of two fundamental principles (along with Approximation Bias) that govern human intelligence.

- Lollapalooza Effect (Charlie Munger, 2010s) – the amplified effect of Cognitive Bias when multiple biases layer and interact

- Peak–End Effect (Peak–End Rule) (Daniel Kahneman, Barbara Fredrickson, 1990s) – the suggestion that one typically recalls and judges an experience based on its most intense moment (“peak”) and its conclusion (“end”) – as opposed to an overall or average impression of the entire experience

- Stability Bias – an indefensible confidence in the stability of one’s memory that contributes to errors in judgment and prediction

- Debiasing – technically, any reduction of bias. The term is usually applied to deliberate efforts to become more aware of Cognitive Biases and to develop thinking habits and decision strategies that are rooted in sound reasoning and available evidence. The three main strategies for Debiasing are Nudging (see Behavior Change Methods), changing Incentives and/or Rewards (see Extrinsic Motivation Discourses and Drives, Needs, & Desires Theories), and training. The last of these includes:

- Training-in-Bias Techniques (Implicit Bias Training; Unconscious Bias Training) – any program or technique designed to expose and influence prejudices that infuse automatic patterns of thinking and acting

- Training-in-Rules Techniques (R.P. Larrick, 2000s) – any program or technique aimed at improving abilities and predispositions to think critically – that is, to rely on sound Modes of Reasoning and available evidence – when contemplating decisions and actions

Cognitive Biases are often confused or conflated with the broader category of Tendencies:

- Tendencies (Behavioral Tendencies; Psychological Tendencies) – general inclinations toward specific behaviors or thought processes. Tendencies may or may not be rooted in Cognitive Biases. Examples include:

- Contrast-Misreaction Tendency – the Tendency to focus on differences or changes in phenomena, rather than their absolute magnitudes, thus leading to missed trends and poor decisions

- Deprival-Superreaction Tendency (Deprival Superreaction) – the Tendency toward intense reaction to losing something or the threat of losing something

- Excessive Self-Regard – the Tendency to overestimate one’s abilities, especially when one has little experience or knowledge of the subject. Often associated with the Dunning–Kruger Effect (see above), it can lead to overconfidence, narcissism, and an inability to admit mistakes.

- Inconsistency Avoidance – the Tendency to resist changing habits so as not to have to confront inconsistencies among beliefs, values, or ideals (see Cognitive Dissonance Theory)

- Influence from Mere Association – the Tendency to judge or perceive a phenomenon on the basis of its association with another phenomenon, even if that association is superficial or accidental

- Kantian Fairness Tendency – the Tendency to expect any formal process to be fair, arising from the belief that people have an inherent sense of right and wrong, and thus

- Optimism Tendency (Optimism Bias; Over-Optimism Tendency) – the Tendency to overestimate the likelihood of positive events and underestimate the likelihood of negative events

- Reason-Respecting Tendency – a Tendency to value reason and evidence in decision making, often leading one to act on incorrect reasons or suspect evidence

- Stress-Influence Tendency – the Tendency to overreact when under a lot of stress

- Twaddle Tendency (Charlie Munger, 1990s) – the Tendency to spend excessive time on trivial or unproductive activities (e.g., meaningless conversations or mindlessly browsing social media)

Some Cognitive Biases, such as the Expectation Bias (see above), are associated with Psychobiological Phenomena:

- Psychobiological Phenomenon – an interaction between psychological processes (thoughts, emotions, expectations) and biological responses (brain activity, hormones, immune function) that influence health and behavior. A Psychobiological Phenomenon can trigger real physiological changes in the body. Examples include:

- Nocebo Effect (Walter Kennedy, 1960s) – adverse effects caused by the mere expectation of harm – that is, the phenomenon where negative expectations or beliefs about a treatment or condition lead to harmful or unpleasant outcomes, even if the treatment itself is inert

- Placebo Effect (Henry Beecher, 1950s) – the phenomenon where one experiences real improvements in symptoms after receiving an inactive treatment, driven by belief and expectation

Commentary

Many commentators grapple with the fact that Cognitive Biases are simultaneously characterized in both positive terms (e.g., as useful efficiencies) and negative terms (e.g., as often irrational and indefensible), making it difficult to categorize them as “tendencies to guard against” or “aspects of human nature to nurture.” Other commentators appreciate that such simplistic classifications are rarely appropriate when discussing something as complex as human learning – that is, such “biases” are not defects in thought processes; they are the way thinking happens.

Authors and/or Prominent Influences

Amos Tversky; Daniel Kahneman

Status as a Theory of Learning

Cognitive Bias does not constitute a theory of learning. However, the notion is a critical element of Cognitive Science research. Indeed, a compelling and defensible explanation of Cognitive Bias is seen by many as a necessary criterion for a scientific theory of learning.

Status as a Theory of Teaching

Cognitive Bias is not a theory of teaching. Some versions of Critical Pedagogy place considerable attention on being mindful of Cognitive Bias.

Status as a Scientific Theory

Cognitive Biases have been studied extensively, with a substantial body of validated evidence associated with most identified biases.

Subdiscourses:

- Anchoring Effect

- Approximation Bias

- Authority Bias (Authority Misinfluence)

- Availability Bias (Availability Heuristic; Availability Misweighing)

- Barnum Effect (Forer Effect)

- Cognitive Bias Codex

- Confirmation Bias

- Contrast-Misreaction Tendency

- Curse of Knowledge (Curse of Expertise)

- Deprival-Superreaction Tendency (Deprival Superreaction)

- Dunning–Kruger Effect (Overconfidence Effect)

- Excessive Self-Regard

- Expectation Bias

- Hawthorne Effect

- Heuristic (Cognitive Heuristic; Heuristic Technique)

- Illusion of Information Adequacy

- Illusion of Knowing

- Illusory Superiority (Above-Average Effect; Lake Wobegon Effect; Leniency Error; Primus Inter Pares Effect; Sense of Relative Superiority; Superiority Bias)

- Implicit Bias (Implicit Stereotype; Implicit Theory; Unconscious Bias)

- Impostor Syndrome (Impostor Phenomenon; Impostorism)

- Inconsistency Avoidance

- Inductive Bias

- Influence from Mere Association

- Kantian Fairness Tendency

- Lollapalooza Effect

- Nocebo Effect

- Optimism Tendency (Optimism Bias; Over-Optimism Tendency)

- Peak–End Effect (Peak–End Rule)

- Placebo Effect

- Psychobiological Phenomenon

- Reason-Respecting Tendency

- Stability Bias

- Stress-Influence Tendency

- Tendencies (Behavioral Tendencies; Psychological Tendencies)

- Training-in-Bias Techniques (Implicit Bias Training; Unconscious Bias Training)

- Training-in-Rules Techniques

- Twaddle Tendency

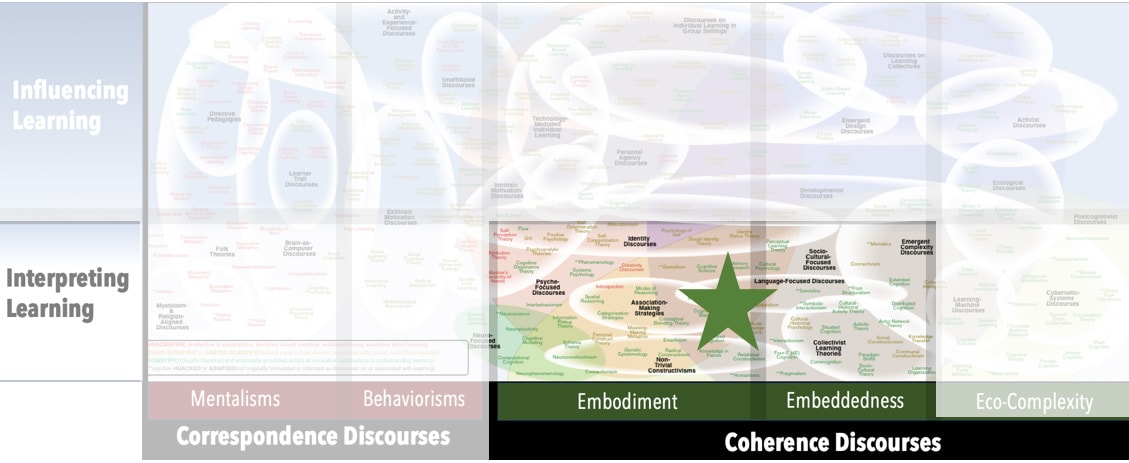

Map Location

Please cite this article as:

Davis, B., & Francis, K. (2025). “Cognitive Bias” in Discourses on Learning in Education. https://learningdiscourses.com.
