AKA

Assessment of Learning

Educational Measurement

Evaluation

Grading

Marking

Scoring

Focus

Interpreting student learning

Principal Metaphors

The word “evaluation” is derived from Old French, é + value, “out + worth = appraise.” The notion was first used in commerce and later picked up as a metaphor by educators. That is, assigning a mark was originally understood as analogous to determining the worth of a product. In a similar vein, the word “assessment” is derived from the Medieval Latin assessare, “set a tax upon,” and so it originally had almost the same meaning as evaluation. As it turns out, almost all of the terms associated with formal review of student learning are anchored to this emphasis. Others include:

- Grading – adapted from the practice of ranking manufactured goods according to quality (i.e., how well they meet pre-defined standards). It is derived from the Middle English gree, “step or degree in a series,” which traces back to the Latin gradus, “step.”

- Scoring – similar in meaning to grading within both education and business, it derives from the act of making notches or incisions (i.e., to signal different levels of quality). It traces back to the Proto-Germanic skura, “to cut,” which is also the root of scar and shear.

- Marking – adapted from the practice of placing prices on items for sale. The word traces back to and across a range of European languages, originally referring to specifying borders and indicating margins.

- Deficit Model of the Learner – the perspective that the learner is lacking in some manner, whether falling short in getting some thing (see Acquisition Metaphor) and/or falling short of getting some where (see Path-Following Metaphor). Two major categories of deficit are:

- Behavioral Deficit – failing to meet expected age-indexed behaviors

- Cognitive Deficit – performing below age-indexed expectations on mentally based tasks, as measured by standardized assessments

- Knowledge is a thing

- Learning is acquiring that thing

- Assessment and evaluation are about determining/assigning worth (of/to that thing).

Knowledge is a fluid → Learning is soaking up the fluid → Assessment is a measure of the learner’s capacity (to hold that fluid).

Knowledge is out there → Learning is internalizing → Assessment rates the accuracy of internalized representations.

Knowledge is a goal → Learning is progressing toward (and ultimately attaining) that goal → Assessment tracks achieved goals and progress toward other goals.

Knowledge is an organism’s repertoire of behavior → Learning is change in behavior due to environmental influences → Assessment measures the effectiveness of rewards/punishments.

Knowledge is appropriate, situated action → Learning is enculturation, en-habiting → Assessment is gauging fitness of one’s actions.

We could go on. But we’ll assume the above details are sufficient to illustrate our main points: (1) There are multiple interpretations of assessment at play in contemporary education. (2) A few dominate, likely because they mesh with prevailing assumptions/metaphors of the nature of knowledge. These few, dominant notions are most often revealed by their uninterrogated alignment with Attainment Metaphor (evident, e.g., in educational aims, curriculum trajectories, etc.) and Acquisition Metaphor (manifest in, e.g., obsessions with learning objects/objectives and valuation of those objects). (3) More defensible conceptions are rendered difficult to understand, and even more difficult to enact, in a context dominated by just a few webs of association.

Originated

Distributed across the history of schooling, but the most prominent models and modes are rooted in notions of objective measurement and standardized production that rose to cultural prominence during the Scientific and Industrial Revolutions.

Synopsis

Technically, the category of Assessment and Evaluation encompasses any activity intended to monitor student learning. It can be planned or spontaneous; it can be focused on improving an individual’s learning or more generally concerned with monitoring an educational system; it can be systematic or haphazard; it can be grounded in the science of learning, or it can be beholden to inherited and indefensible assumptions. Most often, dollops of all of these elements are rolled in together, as might be inferred from the following constructs:

- Assessment Design – that aspect of program planning that attends to the sorts of practice tasks and evaluation tools that are useful in monitoring learning progress on an assumed-to-be-linear learning trajectory. Assessment Design is often explicitly aligned with Instructional Design Models (contrast: Design Thinking), and discussions of Assessment Design typically rely heavily on the Attainment Metaphor.

- Assessment Software – a generic category that includes any computer-based means of administering, grading, and managing examinations and other evaluation tools and tasks

- Demonstration of Learning – an umbrella term that reaches across any means by which one might show the extent to which one has learned. Possibilities include any of the following, along with projects and relevant sorts of presentations.

- Feedback – In everyday educational usage, Feedback has come to refer to any direct commentary on one’s work. The original meaning was very different. Coined in the 1920s in the context of electronics, Feedback described when a portion of an outputted signal “fed back” into an input signal – sometimes with sufficient strength to trigger a self-amplifying loop (see Cybernetics). Applied to teaching and learning, then, Feedback can be interpreted as a critique of teacher-centered, direct instruction as it hints at the importance of learner self-determination and small-but-timely nudges from the teacher. That is, as a metaphor, Feedback can be construed as fitted to most Coherence Discourses. However, there’s no evidence the metaphor was embraced to do that sort of conceptual work.

- Performance Indicators – borrowed from business, markers and actions that can be taken as evidence that a student has learned whatever is/was hoped to be learned

- Proficiency – in the context of Assessment and Evaluation, the attainment of prespecified (“cut-off” or “minimum”) scores on some manner of formal test or observation protocol

- Proficiency-Based Learning – an umbrella term that reaches across a range of perspectives on and strategies for Assessment and Evaluation, the more common of which have titles that combine one term from each of the following two lists: List A: competency-based, mastery-based, outcome-based, performance-based. List B: assessment, education, evaluation, grading, learning, instruction.

- Formative Assessments (Low-Stakes Assessments) – Usually contrasted with Summative Assessments, Formative Assessments include all in-process evaluations of student work, generally intended to offer timely and focused feedback to support more sophisticated learning processes and products. (Most often, the Attainment Metaphor figures centrally in discussions of Formative Assessments – which is to be expected, given the alignment of Formative Assessments with notions of progress toward attaining a goal.) Associated constructs include:

- Assessment for Learning (Assessment as Learning) – typically contrasted with “assessment of learning,” an attitude (and accompanying practices) of assessing a learner’s emerging understandings in real time – that is, during the learning and teaching process – and adjusting teaching accordingly. Specific advice varies considerably, ranging from engagements that afford immediate, ongoing feedback to more routinized structures of formal monitoring. Specific strategies include:

- Buzz Session – a teaching strategy involving breaking into small groups for brief, focused discussions on a specific topic or question. Findings or conclusions are typically presented to the larger group for further discussion or synthesis.

- Hinge Question (Dylan Wiliam, 2000s) – a question posed at the “hinge” point of a lesson, usually in multiple-choice format, that is designed to afford the teacher a quick sense of what each student knows, doesn’t know, and needs to do next in relation to the topic at hand

- Mastery-Based Grading – a type of Formative Assessment, typically comprising a description of how well one has mastered a concept along with, if necessary, advice on moving to greater mastery. Specific strategies include:

- Misconception Check – instructional strategies involving the presentation of common misconceptions. Students confirm or refute them, promoting critical thinking and deeper conceptual understanding.

- Progress Monitoring (Frequent Progress Monitoring) – ongoing formal assessments of a learner that are indexed to specific benchmarks and outcomes – and, usually, part of an explicit intervention or a learning plan. Specific strategies include:

- Circle the Questions – a strategy where students identify key questions on a worksheet or survey that they find confusing or cannot answer. Based on the results, the teacher designs follow-up activities to target the identified knowledge and comprehension issues.

- Exit Slips (Exit Tickets) – written reflections completed by students at the end of a lesson to assess their understanding, gather feedback, or encourage critical thinking. Teachers use them to gauge learning, identify misconceptions, and guide future instruction.

- Summative Assessments – Usually contrasted with Formative Assessments, Summative Assessments are end-point evaluations of student learning, typically expressed as “final grades,” and often high stakes. (While the notion of “end point” indicates a reliance on the Attainment Metaphor, most Summative Assessments align more strongly with the Acquisition Metaphor, through which learning is interpreted as an entity to be measured and the projects of learning are regarded as objects.)

- Progressive Assessment – (1) an approach to assessment that sits between Formative Assessments and Summative Assessments and that is intended to provide information on one’s progress toward prespecified learning outcomes by means of spaced formal assessments that are informed by previous results and that are structured to support progress toward future assessments; (2) a type of formal evaluation in which tasks/questions are modified/chosen based on one’s previous actions/responses, thus providing more precise information on one’s mastery of skills and facts. Specific types include:

- 4-Point Method – the practice of adjusting a learner’s instructional program based on their four most recent assessment results (e.g., raising the bar if fully successful, or lowering it if consistently failing)

- Benchmark Assessments (Interim Assessments) – pre-set evaluations that are administered at predetermined times during the school year to gauge learning in relation to long-term, curriculum-specified outcomes. Most often, Benchmark Assessments are understood as a sort of hybrid of Formative Assessments and Summative Assessments (see above) and they are sometimes conflated with Progress Tests (see below). (See also: Benchmark, under Attainment Metaphor.)

- Achievement Tests (Achievement Examinations) – perhaps the broadest category of assessment tools associated with formal education, encompassing any tool or activity intended to gauge levels of mastery associated with one or more programs of study, whether designed by a teacher for one-time use in a local setting or by an international body to conduct ongoing comparison studies of educational systems. Those Achievement Tests that are designed for use across multiple settings are typically grouped into two categories: (1) general batteries – that is, Achievement Tests that cover multiple academic areas, usually reaching across at least literacy, mathematics, and science; (2) specialist instruments – that is, Achievement Tests that focus on specific content areas and/or specific performance levels and/or specific vocational needs. Types that fall into the latter category, specialist instruments, include:

- Ability Test (School Ability Test) – a formal assessment of one’s achievements and intelligence, usually with the intention of informing subsequent aspects of the learner's formal education

- Concept Inventory – Typically associated with very-well defined sets of facts or skills, a Concept Inventory is a formal, rigorous accounting of one’s mastery of specific details – most often presented in the form of a multiple-choice or other limited-response examination. (The notion of “inventory” reveals that Concept Inventory is tethered to the Acquisition Metaphor.)

- Diagnostic Tests (Diagnostic Assessments) – tests that are designed to identify and measure learning disabilities and disorders for the purpose of prescribing some manner of remediation to address a formal diagnosis. [Compare: Ability Tests, above; Achievement Tests, under Assessment and Evaluation.] Diagnostic Tests are based on the questionable assumption/analogy that difficulties with learning are like medical illnesses. Most are focused on reading/literacy; behavior and mathematics are well represented as well. For the most part, Diagnostic Tests are indexed to specific curricula and jurisdictions – which helps to explain why there are many, many options available.

- Progress Tests – Typically associated with large educational systems or multi-year programs of study, Progress Tests are examinations administered at regular intervals to gauge levels of student mastery at specific stages in well-defined programs of study. (The notion of “progress” through a well-defined program of study reveals that Progress Tests are anchored to the Path-Following Metaphor. Arguably, they are just as reliant on the Acquisition Metaphor, since proponents focus at least as much on “how much” has been learned as “how far” learners have moved.)

- Readiness Test – a formal tool to assess what one already knows in a particular domain, usually along with levels of mastery of study skills associated with that domain, in order to predict the effectiveness of a specific program of instruction. Most Readiness Tests are associated with literacy or mathematics learning and are formalized to the extent that they should be administered by credentialed specialists.

- Computer-Adaptive Tests (Tailored Testing) – formal assessments in which the sequence and difficulty of questions are influenced by the answers given (e.g., an incorrect response might trigger an easier question or one on a different topic)

- High-Stakes Test – any formal test that might open or close future opportunities (e.g., advancements, punishments, awards, compensation) for a learner, a teacher, a school, and/or an educational jurisdiction

- Rubrics – A rubric is a grading guide, typically presented in grid form, one dimension of which is used to identify essential qualities of assigned work and the other dimension of which indexes work quality to grades. Proponents highlight that expectations are clarified for learners and fairness of grading is more transparent. Detractors counter that Rubrics often press student attitudes and efforts toward “make sure to give teachers what they want,” as opposed to, say, “engage authentically with a matter of profound interest.” Rubrics can be parsed into two main types – one or both of which, depending on how criteria and expectations are articulated, can be made to fit with almost every prominent discourse on learning:

- Holistic Rubrics – With criteria typically presented in the form of descriptive prose, Holistic Rubrics are used to offer overall, global impressions of tasks or achievements.

- Analytic Rubrics – Typically presented as checklists or grids, Analytic Rubrics correlate levels of performance with scores, enabling nuanced and weighted feedback across multiple criteria.

- Confidence-Based Learning – Focused on memorized details, Confidence-Based Learning aims to evaluate both the level of one’s mastery and one’s confidence in that mastery. Reliant on multiple-choice (or similar) tests, Confidence-Based Learning is designed to minimize the effects that guessing can have on scores. (Confidence-Based Learning is unusual in its focused and unwavering alignment with the Acquisition Metaphor, evident in its treatment of learning as measurable objects, its faith in objective measurement, and its uncritical separation of what one thinks one knows from what one actually/objectively knows.)

- Authentic Assessment – Commonly associated with one or more Activist Discourses, an Authentic Assessment is one that attends not just to mastery of content, but to the worthwhileness, significance, and meaningfulness of learning. It is associated with a range of learner-focused strategies. (Metaphors that link learning to empowerment or voice are common in discussions of Authentic Assessment.)

- Standards-Based Assessments (Standardized Examinations) – Often contrasted with Norm-Referenced Assessments, Standards-Based Assessments are Criteria-Referenced Assessments that are rigidly indexed to well-defined programs of study and designed to provide quantitative information on the extent to which learners have mastered the content of those programs. (Standards-Based Assessments are most prominently aligned with the Acquisition Metaphor, evident in the treatment of learning as measurable objects and the faith in objective measurement.) The best known of Standards-Based Assessments are those Achievement Tests designed to monitor and address educational matters at jurisdictional, national, and international levels. Such tests provide aggregated information that is typically indexed to local educational conditions and often analyzed with reference to different subpopulations (e.g., by culture or socio-economic status). Examples include:

- National Assessment of Educational Progress (NAEP) – a battery of assessments intended to provide information on academic progress in the United States. Aggregate scores (i.e., not for individuals or schools, but for jurisdictions and identified subpopulations) are generated across many disciplines (reading, writing, mathematics, science, history, geography, and other topics). It is administered periodically to students at grades 4, 8, and 12.

- Programme for International Student Assessment (PISA) (Organisation for Economic Co-operation and Development, OECD) – an international assessment of achievement (in mathematics, science, and reading), problem solving, and cognition. It is administered every three years (since 2000) to 15-year-olds.

- Progress in International Reading Literacy Study (PIRLS) (International Association for the Evaluation of Educational Achievement, IEA, 1990s) – an international assessment of literacy achievement, indexed to information on conditions of learning for those assessed. It is administered every five years (since 2001) at the equivalent of grade 4.

- Trends in International Mathematics and Science Study (TIMSS) (International Association for the Evaluation of Educational Achievement, IEA, 1990s) – an international assessment of mathematics and science achievement, indexed to information on conditions of learning for those assessed. It is administered every four years (since 1995) at the equivalent of grades 4 and 8.

- Curriculum-Based Assessment (Curriculum-Based Evaluation) – a broad evaluation of one’s mastery of some aspect of a formal program of studies, which may include any or all of the other modes identified on this page. Types include:

- Curriculum-Based Measurement (CBM) – any program of formal evaluation that regularly and systematically tracks the learner’s progress in relation to a specific program of study. CBM originated in special education, focusing on core disciplines, but it is now used across programs and subject areas. Types of CBM include:

- General Outcome Measurement – a type of Curriculum-Based Measurement that is intended to provide educators with a general sense of a learner’s progress, typically expressed as an Age Equivalent or a Grade Equivalent

- Mastery Measurement – a type of Curriculum-Based Measurement that is focused on mastery of topics or competencies that are presented in a sequence. New skills are introduced only when mastery of preceding skills in the sequence has been demonstrated.

- Criteria-Referenced Assessments – Often contrasted with Norm-Referenced Assessments, Criteria-Referenced Assessments include any formal tool designed to provide information on the extent of one’s learning of specific content. Technically, Standards-Based Assessments are Criteria-Referenced Assessments, although the former tend to be standardized and large scale, while the latter also include quizzes prepared by teachers, tests prepared by textbook publishers, and so on. (Criteria-Referenced Assessments are typically associated with the Acquisition Metaphor, evident in the treatment of learning as measurable object(ive)s.)

- Norm-Referenced Assessments – Often contrasted with Standards-Based Assessments and Criteria-Referenced Assessments, Norm-Referenced Assessments present information on learners’ mastery of content in terms of rankings relative to one another, rather than in terms of measures of achievement. Norm-Referenced Assessments rely mainly on the Attainment Metaphor, as learning is typically interpreted in terms of progress along a defined trajectory – based on which it makes sense to rate learners against one another:

- Age Equivalent (Age-Equivalent Score; Test Age) – an indication of learning progress, expressed in terms of the average age at which the earned score is attained

- Grade Equivalent (Grade Norm; Grade Scale; Grade Score) – an indication of learning progress, expressed in terms of average grade-level performance (e.g., one performing at the level of a typical 5th-grade student would be assigned a Grade Equivalent of 5)

The following is a small sampling of some of the more prominent, non-discipline-specific Norm-Referenced Assessments:

- Wide Range Achievement Test, Fifth Edition (WRAT5) (Sidney W. Bijou, Joseph Jastak; originally developed in the 1940s; most recent revision in the 2020s): a brief, group-normed, individually administered test focused on word recognition, sentence comprehension, spelling, and computation. For ages 12 to 94.

- Peabody Individual Achievement Test-Revised/Normative Update (PIAT-R/NU) (originally developed in the 1970s; revised in the 1980s; updated in the 1990s): an hour-long, individually administered test that yields nine scores (General Information, Reading Recognition, Reading Comprehension, Total Reading, Mathematics, Spelling, Total Test, Written Expression, and Written Language). For ages 5 to 22.

- Woodcock–Johnson Tests of Achievement, Fourth Edition (WJ IV ACH) (Richard Woodcock, K. S. McGrew, N. Mather; originally developed in 1970s with the Woodcock–Johnson Psychoeducational Battery, most recently revised in 2010s): an individually administered test, comprising 11 subtests in the standard battery and 11 more in the extended battery. It is designed to assess both academic achievement and cognitive development, and it includes measures of skills in reading, writing, oral language, mathematics, and academic knowledge. For ages 2 to 80+.

- Kaufman Test of Educational Achievement, Third Edition (KTEA-3) (Alan S. Kaufman, Nadeen L. Kaufman; originally developed in the 1990s; most recent revision in the 2010s): an individually administered test to identify both achievement gaps and learning disabilities. It comprises 19 subtests across core academic skills in reading, mathematics, written language, and oral language. For ages 4 to 25.

- Wechsler Individual Achievement Test, Second Edition (WIAT-II) (David Wechsler; originally developed in the 1990s, revised in the 2000s): an individually administered test used to assess academic achievement across any or all of four areas (Reading, Math, Writing, Oral Language). For ages 4 to 85.

- Holistic Grading (Global Grading; Impressionistic Grading; Nonreductionist Grading; Single-Impression Scoring) – a method of evaluating essays and other compositions, based on overall quality and global impressions – usually in comparison to an exemplar of some sort. Holistic Grading is used in both classroom-based work and large-scale assessments.

- Differentiated Assessments – Associated with Differentiated Instruction, Differentiated Assessments refer to any strategy developed, adopted, or adapted by a teacher to make sense of each individual student’s current level of mastery and consequent needs. (See Differentiated Instruction for the cluster of metaphors most often associated with the discourse.)

- Portfolio Assessments – Often positioned as an alternative to testing, and in an explicit move to foreground quality over quantity (and quantification), Portfolio Assessments are curated collections of artefacts that are intended to afford insight into learner growth over time. (Portfolio Assessments treat learning in terms of growth and development, and they are thus more frequently associated with Coherence Discourses.)

- Electronic Portfolio (e-Portfolio; ePortfolio) – a portfolio comprising digital and/or digitized items, which are often more varied, more dynamic, and more integrated than the artifacts collected in a physical portfolio. Typical items include text files, images, videos, and blog entries – any of which might be hyperlinked to any other, as well as to items outside the portfolio.

- Peer Assessment – As the phrase suggests, Peer Assessment involves students in judging the performance of one another. The notion is not well theorized or defined – and, consequently, associated practices span various spectra of sensibilities. For instance, Peer Assessment may be focused on either formative feedback or summative evaluations, it may be framed as a process based on caring and support or as the objective application of defined taxonomies. And so on. (Given the range of practices and sensibilities associated with Peer Assessment, it cannot be interpreted in terms of singular or prominent clusters of notions.)

- Performance Assessment (Performance-Based Assessment; Alternative Assessment; Authentic Assessment) – Understood in most general terms, a Performance Assessment is a non-standardized task that presents an opportunity for one to demonstrate a competence in a manner that permits a summative interpretation according to pre-specified criteria. Performance Assessments tend to be contextualized and to be practical or applied in nature. Other aspects (e.g., individual vs. group; close-ended vs. open-ended) vary. (Performance Assessment techniques are usually associated with Coherence Discourses – that is, fitted to understanding learning in terms of construing, mastering, and participating, and learners as active agents and interactive participants.)

- Self-Assessment – As the term suggests, Self-Assessment is about involving students in evaluating their own work. Principally, advice and commentaries focus on the positive impacts on learners’ awareness of task requirements, their own effort, and their own understandings. Self-Assessment has been shown to be strongly correlated with improved achievement. (While described in different ways, most commentaries on Self-Assessment are strongly aligned with the Attainment Metaphor, especially notions of progress, awareness of location, and pacing.)

- Dynamic Assessment – strongly associated with Socio-Cultural-Focused Discourses, especially those that highlight Apprenticeship, Dynamic Assessment involves learners in providing feedback to one another, structured in a manner that gradually positions more advanced learners as mentors and coaches as they move closer to the center of the community

- Educational Quotient – an analogue to Intelligence Quotient (see Medical Model of (Dis)Ability), but indexed to the construct of “educational achievement” rather than the construct of “innate intelligence.” Mathematically, Educational Quotient is the ratio of Educational Age (see below) to chronological age, stated out of 100 (that is, Educational Age ÷ chronological age × 100).

- Educational Age – the age at which one’s level of mastery of school-based competencies is typically achieved. Most of the above-mentioned tools are designed to generate the Educational Age of the person tested.

- Future-Authentic Assessment (Phillip Dawson, 2020s) – an attitude toward assessment that is attentive to the accelerating paces of knowledge evolution, and consequently considers current realities of/in a discipline alongside “likely future realities”

- Student Growth Measure – a summary of a student’s achievement and development, expressed in quantified terms (and used more often for the evaluation of teacher performance than for structuring the student’s experiences)

- Value-Added Measures (Growth Measures; Value-Added Scores) – borrowed from economics, a quantification of a teacher’s influence on student learning that is used in considerations of salary increments, ongoing employment, and possible promotions
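As a worked illustration of the Educational Quotient entry above, the following is a minimal sketch of the arithmetic only, assuming both ages are expressed in the same unit (e.g., years). The function name and the sample learner are hypothetical and are not drawn from any particular testing instrument.

```python
def educational_quotient(educational_age: float, chronological_age: float) -> float:
    """Ratio of Educational Age to chronological age, stated out of 100.

    Both ages are assumed to be in the same unit (e.g., years).
    """
    if chronological_age <= 0:
        raise ValueError("chronological_age must be positive")
    return 100.0 * educational_age / chronological_age


# Hypothetical learner: aged 10, performing at the level typical of 12-year-olds.
print(educational_quotient(12, 10))  # 120.0
```

On this reading, a quotient of 100 indicates performance typical of one's chronological age, while values above or below 100 indicate performance typical of older or younger learners, respectively.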

Commentary

Arguably, information on what educators really believe about learning is on fullest display in assessment practices. Within formal education, more often than not, assessment is the tail that wags the dogs of curriculum content and teaching practice. Phrased differently, assessment exerts a powerful influence on both explicit descriptions and implicit understandings of “learning” and “learners” – and, most often, these tendencies press in the direction of reducing or ignoring the complexity of learning and learners. The small subset of discourses that swirl around the topic, then, could be argued to be disproportionately impactful. Quick senses of the issues and problems that swirl around narrow emphasis in Assessment and Evaluation can be illustrated by such principles and constructs as:

- Campbell’s Law (Donald Campbell, 1970s) – an adage out of sociology about the inevitable misuse of quantitative social indicators. In education, Campbell’s Law is often interpreted in terms of test scores and funding (or teacher salaries) in the suggestion that, when those are linked, schooling will be more geared to test-taking than to well-rounded education.

- Fadeout (fadeout; Preschool Fadeout) – the tendency for observed differences in student achievement (e.g., as measured by Norm-Referenced Assessments; see Assessment and Evaluation) among young children to level out in the first years of schooling, whether or not they attended preschool. There is much debate on the construct, which is often argued to be a myth and/or dismissed – sometimes on the basis of conflicting research results, sometimes as misinterpreted dynamics (e.g., learners with an early educational advantage may be boosting their peers), and sometimes as reductionist misreadings (i.e., focusing only on cognitive processes and ignoring social, economic, and other advantages associated with preschool attendance).

- Volvo Effect (Peter Sacks, 2020s) – a cynical nod to the fact that standardized tests correlate more strongly with the income and education of one’s parents (suggested to be evident in the type of car they drive) than with one’s academic and career success

- Washback Effect (1980s) – a reference to any influence that formal testing might have on teaching practice, curriculum design, and student behavior.

Authors and/or Prominent Influences

Diffuse

Status as a Theory of Learning

In a sense, most Assessment and Evaluation discourses are anti-theoretical. That is, they are encountered as end-products of belief systems – the inevitable consequences of assumptions and assertions that have already been embraced.

Status as a Theory of Teaching

Assessment and Evaluation are not often described as “theories of teaching,” but there is widespread recognition that these discourses have powerful shaping influences on what happens in classrooms.

Status as a Scientific Theory

For the most part, Assessment and Evaluation can serve as case studies of what happens when grounding assumptions about learning are ignored. That is, on the whole, Assessment and Evaluation cannot be described as scientific – despite the fact that massive examination-producing and data-analysis industries have arisen around formal testing and student ranking.

Subdiscourses:

- Achievement Tests (Achievement Examinations)

- Age Equivalent (Age-Equivalent Score; Test Age)

- Analytic Rubrics

- Assessment Design

- Assessment for Learning (Assessment as Learning)

- Assessment Software

- Authentic Assessment

- Behavioral Deficit

- Benchmark Assessments (Interim Assessments)

- Buzz Session

- Campbell’s Law

- Circle the Questions

- Cognitive Deficit

- Computer-Adaptive Tests (Tailored Testing)

- Concept Inventory

- Confidence-Based Learning

- Criteria-Referenced Assessments

- Curriculum-Based Assessment (Curriculum-Based Evaluation)

- Curriculum-Based Measurement

- Deficit Model of the Learner

- Demonstration of Learning

- Diagnostic Tests (Diagnostic Assessments)

- Differentiated Assessments

- Dynamic Assessment

- Educational Age

- Educational Quotient

- Electronic Portfolio (e-Portfolio; ePortfolio)

- Exit Slips (Exit Tickets)

- Fadeout (fadeout; Preschool Fadeout)

- Feedback

- Formative Assessments (Low-Stakes Assessments)

- 4-Point Method

- Future-Authentic Assessment

- General Outcome Measurement

- Grade Equivalent (Grade Norm; Grade Scale; Grade Score)

- High-Stakes Test

- Hinge Question

- Holistic Grading (Global Grading, Impressionistic Grading, Nonreductionist Grading, Single-Impression Scoring)

- Holistic Rubrics

- Kaufman Test of Educational Achievement, Third Edition (KTEA-3)

- Mastery-Based Grading

- Mastery Measurement

- Misconception Check

- National Assessment of Educational Progress (NAEP)

- Norm-Referenced Assessments

- Peabody Individual Achievement Test-Revised/Normative Update (PIAT-R/NU)

- Peer Assessment

- Performance Assessment (Performance-Based Assessment; Alternative Assessment; Authentic Assessment)

- Performance Indicators

- Portfolio Assessments

- Proficiency

- Proficiency-Based Learning

- Programme for International Student Assessment (PISA)

- Progress in International Reading Literacy Study (PIRLS)

- Progress Monitoring (Frequent Progress Monitoring)

- Progress Tests

- Progressive Assessment

- Readiness Test

- Rubrics

- Self-Assessment

- Standards-Based Assessments (Standardized Examinations)

- Student Growth Measure

- Summative Assessments

- Trends in International Mathematics and Science Study (TIMSS)

- Value-Added Measures (Growth Measures; Value-Added Scores)

- Volvo Effect

- Washback Effect

- Wide Range Achievement Test, Fifth Edition (WRAT5)

- Woodcock–Johnson Tests of Achievement, Fourth Edition (WJ IV ACH)

- Wechsler Individual Achievement Test, Second Edition (WIAT-II)

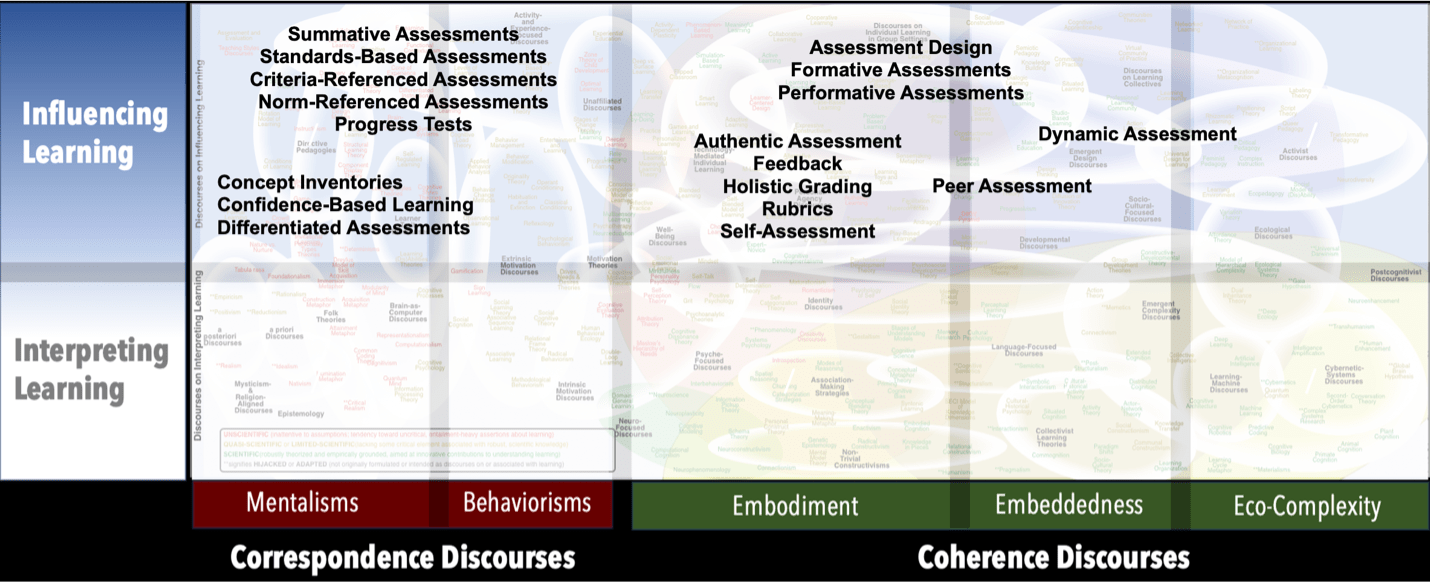

Map Location

Please cite this article as:

Davis, B., & Francis, K. (2025). “Assessment and Evaluation” in Discourses on Learning in Education. https://learningdiscourses.com.
